(2026–2028) PIONEER — Neuromorphic Active Vision for Embodied Object Perception

GACR Standard Projects / CTU

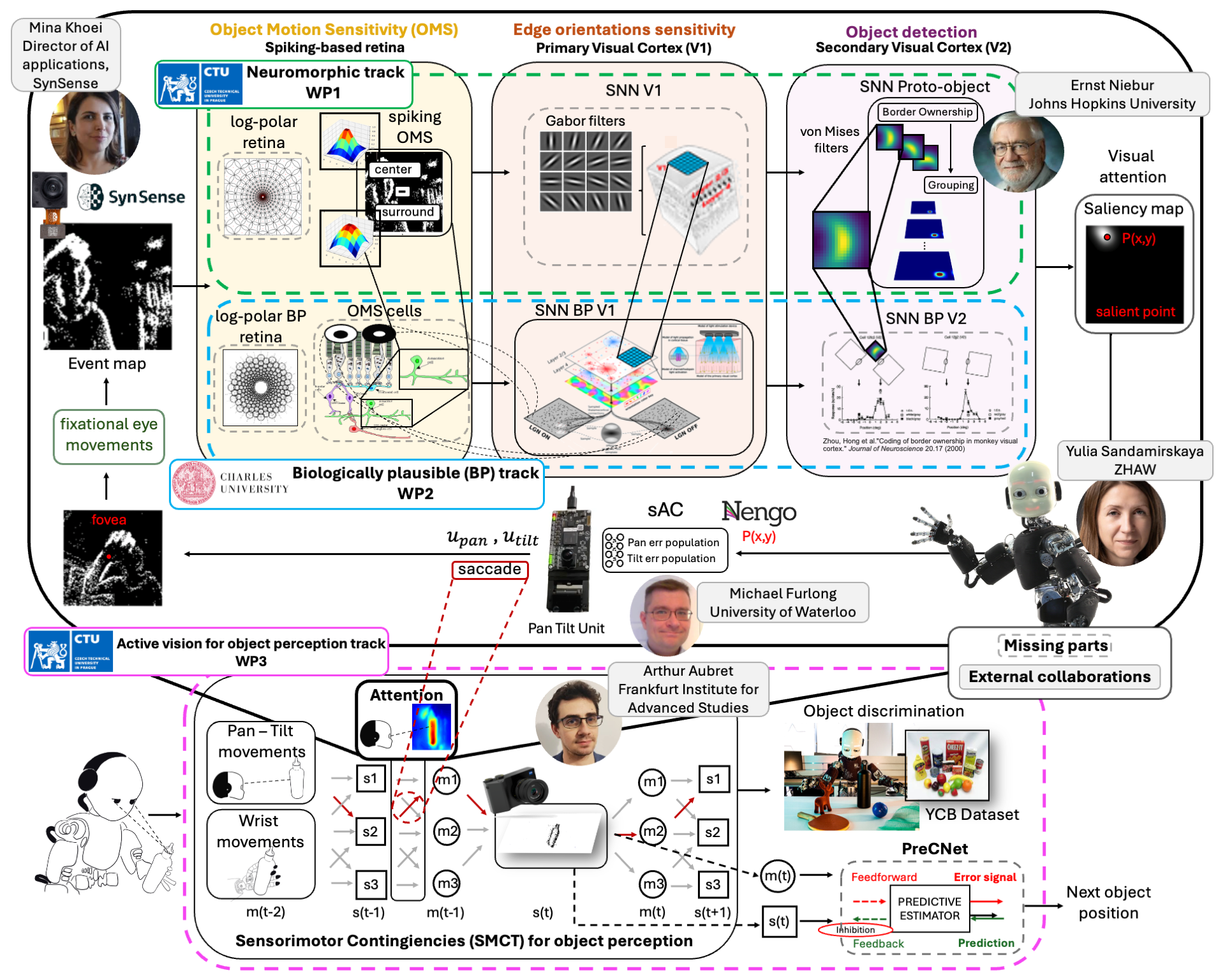

Overview: PIONEER aims to decode the principles of biological active sensing and to apply them, using low-power, low-latency neuromorphic sensing and computing, in an intelligent system that optimizes visual information processing. The project develops a fully neuromorphic end-to-end active vision architecture, assesses the value of biological realism in neuromorphic vision, and integrates active embodied object perception into humanoid robotics on the iCub platform.

Work Packages:

- WP1 – Neuromorphic Track: Develop an integrated, fully neuromorphic end-to-end active vision architecture on Speck neuromorphic hardware, comprising a log-polar retina, V1 edge extraction, and OMS cells for motion detection.

- WP2 – Biologically Plausible Track: Explore the benefits of greater biological realism by replacing the cortical stages with biologically detailed recurrent SNN models of primate V1 and V2, and determine the optimal level of abstraction for robotics.

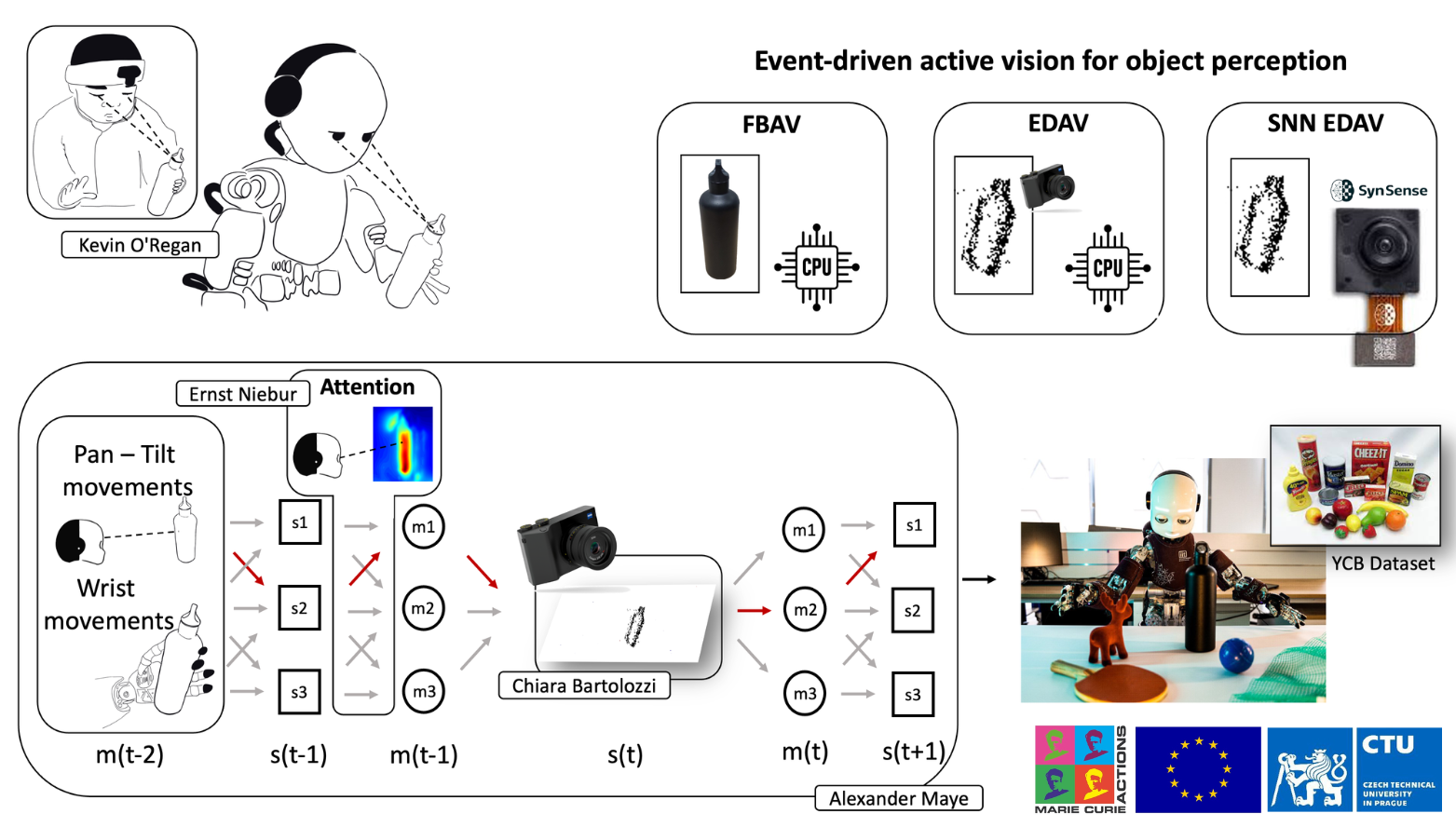

- WP3 – Active Vision for Object Perception: Integrate Sensorimotor Contingency Theory (SMCT) rules for embodied object perception through saccadic and wrist movements, enabling object discrimination and motion prediction on the iCub robot.
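
As an illustration of the log-polar retina named in WP1, the following is a minimal NumPy sketch that bins event pixel coordinates into rings and wedges around the image centre. It is not the project's implementation: the function names, the ring and wedge counts, and the `r_min` foveal radius are assumptions chosen for the example; only the 346×260 sensor size comes from the DAVIS346 camera.

```python
import numpy as np


def log_polar_bins(x, y, width=346, height=260,
                   n_rings=32, n_wedges=64, r_min=1.0):
    """Map event pixel coordinates to (ring, wedge) indices.

    Hypothetical helper: ring spacing grows logarithmically with
    eccentricity, so resolution is highest near the image centre.
    """
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    cx, cy = (width - 1) / 2.0, (height - 1) / 2.0
    dx, dy = x - cx, y - cy

    r = np.hypot(dx, dy)            # eccentricity in pixels
    theta = np.arctan2(dy, dx)      # angle in [-pi, pi]
    r_max = np.hypot(cx, cy)        # distance from centre to a corner

    # Normalised log-radius in [0, 1]; radii below r_min fall in ring 0.
    rho = np.log(np.maximum(r, r_min) / r_min) / np.log(r_max / r_min)
    ring = np.minimum((rho * n_rings).astype(int), n_rings - 1)
    wedge = ((theta + np.pi) / (2 * np.pi) * n_wedges).astype(int) % n_wedges
    return ring, wedge


def accumulate_events(x, y, width=346, height=260, n_rings=32, n_wedges=64):
    """Count events per log-polar cell (assumed usage, for illustration)."""
    ring, wedge = log_polar_bins(x, y, width, height, n_rings, n_wedges)
    hist = np.zeros((n_rings, n_wedges), dtype=int)
    np.add.at(hist, (ring, wedge), 1)   # handles repeated indices correctly
    return hist


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    xs = rng.integers(0, 346, size=1000)
    ys = rng.integers(0, 260, size=1000)
    print(accumulate_events(xs, ys).shape)
```

A fixed lookup of this kind is one simple way to obtain foveated sampling; a hardware retina would more likely implement the mapping as fixed synaptic connectivity rather than per-event arithmetic.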

Technologies: Speck neuromorphic platform with DYNAP-CNN chip, DAVIS346 COLOR event-driven cameras, iCub humanoid robot with 3D-printed camera mounts, pan-and-tilt unit (PTU), spiking neural networks, YCB object dataset.
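
For readers unfamiliar with spiking neural networks, the sketch below simulates a single leaky integrate-and-fire (LIF) neuron with forward-Euler integration. The LIF model and every parameter value here are assumptions made for illustration; the project description does not state which neuron model or parameters are used on the hardware.

```python
import numpy as np


def lif_simulate(input_current, dt=1e-3, tau=20e-3,
                 v_thresh=1.0, v_reset=0.0):
    """Simulate one LIF neuron (illustrative parameters, not the project's).

    Membrane dynamics: tau * dv/dt = -v + I(t). The neuron emits a spike
    and resets whenever v reaches v_thresh.
    """
    input_current = np.asarray(input_current, dtype=float)
    v = v_reset
    spikes = np.zeros(len(input_current), dtype=bool)
    trace = np.zeros(len(input_current))

    for t, i_t in enumerate(input_current):
        v += dt / tau * (-v + i_t)   # forward-Euler step of the leak + drive
        if v >= v_thresh:
            spikes[t] = True
            v = v_reset              # hard reset after a spike
        trace[t] = v
    return spikes, trace


if __name__ == "__main__":
    # Constant supra-threshold drive for one second of simulated time.
    spikes, _ = lif_simulate(np.full(1000, 2.0))
    print("spike count:", int(spikes.sum()))
```

The neuron stays silent when the drive settles below threshold and fires at a rate that grows with the input, which is the basic property that lets event-driven networks remain idle, and therefore low-power, when nothing changes in the scene.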

Impact: PIONEER advances low-latency, energy-efficient processing by implementing a fully neuromorphic active vision system for object perception. The project bridges neuroscience, robotics, and AI, and supports EU Sustainable Development Goals by developing low-power intelligent systems. It aims to establish new benchmarks for neuromorphic robotic vision and to offer an alternative to conventional feedforward computer vision approaches that rely on large datasets.